How to write great key results

The key results you choose will make or break your OKRs. Learn how to pick the right ones for your team, company, and product.

Key results tell us if we’re getting closer to achieving our goals.

They seem simple, but writing great key results can be hard.

The perfect key result

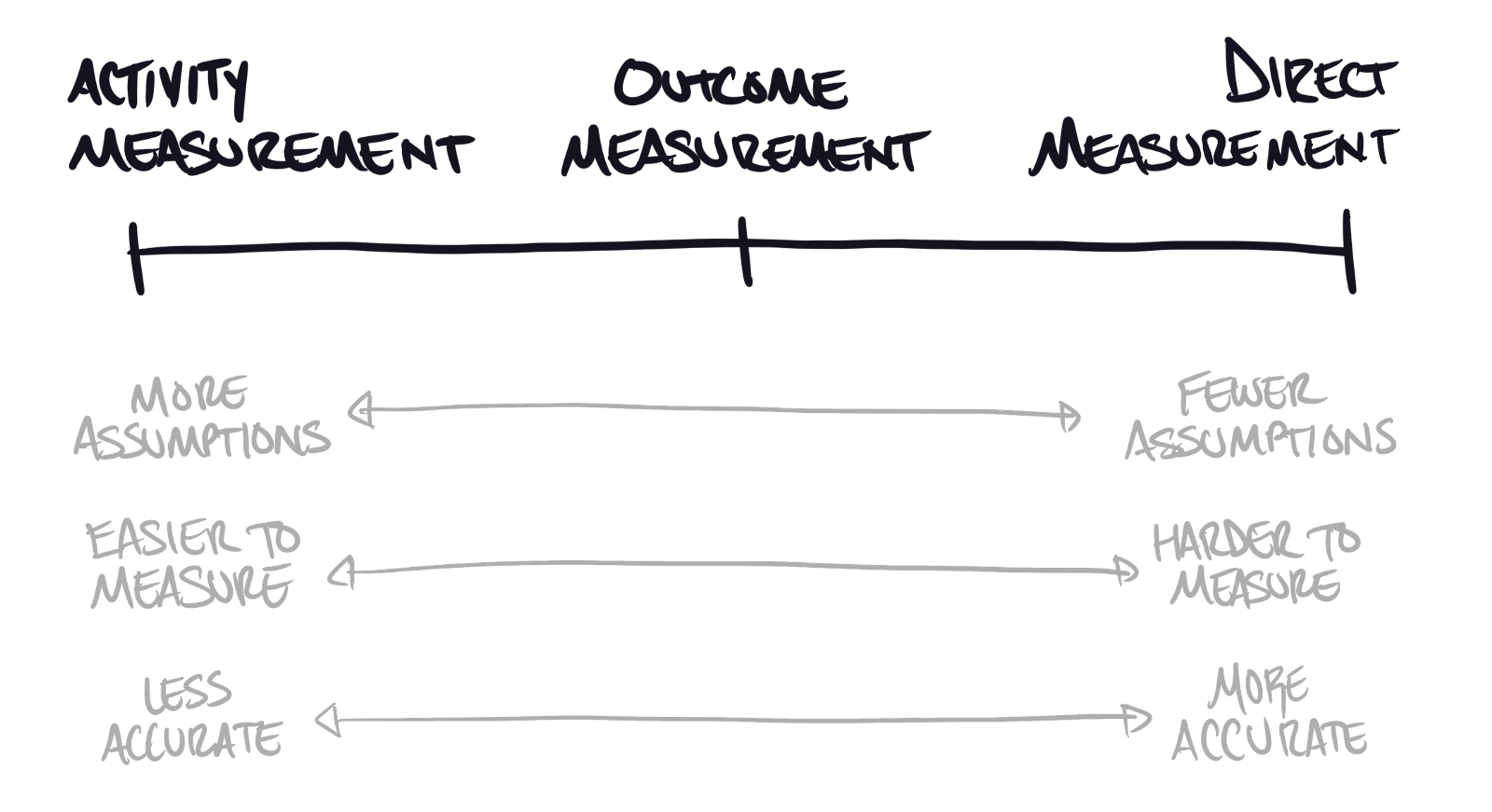

Since key results measure our progress towards an objective, the best key result is a direct measurement of that objective itself. For example, say we have an objective to “Make our website more resilient”. That’s a laudable goal and ideally, there’s a number on a dashboard somewhere that says “Website Resiliency: 12”. Perfect! Our key result might be “Increase Website Resiliency from 12 to 17”.
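
If a number like that did exist, tracking the key result would be simple arithmetic. Here is a minimal Python sketch, assuming progress is measured linearly from the starting value to the target; the function name `key_result_progress` and the current value of 14 are made up for illustration.

```python
def key_result_progress(start: float, target: float, current: float) -> float:
    """Return how far `current` is from `start` toward `target`, clamped to [0, 1]."""
    if target == start:
        raise ValueError("start and target must differ")
    fraction = (current - start) / (target - start)
    # Clamp so that regressions read as 0% and overshoots read as 100%.
    return max(0.0, min(1.0, fraction))


# Hypothetical reading: "Website Resiliency" has moved from 12 to 14 on its way to 17.
progress = key_result_progress(start=12, target=17, current=14)
print(f"{progress:.0%}")  # 40%
```

The same formula works for metrics you want to push down, since the sign of the denominator flips along with the numerator.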

That’s a great metric. It’s clear, concise, and verbatim what we’re trying to achieve with our objective. The only problem is it’s impossible to measure. Site resiliency is multi-dimensional and trying to bundle the different aspects of that goal into a single number doesn’t work. It’s like trying to measure “engagement” or “satisfaction” or “alignment” — fantastic things to aim for but you can’t calculate them directly.

Direct measurement is wonderful when it works, but it almost never does.

The metrics are just too big.

Instead, we have to find proxies: closer, more concrete instances of the concept that are easier to measure.

Outcome-based key results

The best way to break down a direct-measurement key result is to think about what you’ll see as that metric changes.

For example, let’s go back to the objective “Make our website more resilient”. As that happens, you might see other surrounding metrics start to change too. Maybe the percentage of unplanned downtime changes. Maybe your ability to recover from a broken deploy improves. Maybe the number of geographic locations your servers are hosted in goes up.

Measuring “resiliency” directly may be impossible, but there’s a constellation of metrics surrounding that concept that are much easier to measure.
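
To make one of those metrics concrete, here is a rough Python sketch of how the percentage of unplanned downtime could be calculated over a reporting period. It assumes you already have outage records as `(start, end, planned)` tuples that don't overlap; the function name, the record shape, and the sample data are all hypothetical.

```python
from datetime import datetime, timedelta


def unplanned_downtime_pct(outages, period_start, period_end):
    """Percentage of the period spent in unplanned outages.

    `outages` is a list of (start, end, planned) tuples. Overlapping
    outages are NOT merged, so this assumes the records don't overlap.
    """
    period_seconds = (period_end - period_start).total_seconds()
    if period_seconds <= 0:
        raise ValueError("period_end must be after period_start")

    down_seconds = 0.0
    for start, end, planned in outages:
        if planned:
            continue  # scheduled maintenance doesn't count against us
        # Clip each outage to the reporting period.
        clipped_start = max(start, period_start)
        clipped_end = min(end, period_end)
        if clipped_end > clipped_start:
            down_seconds += (clipped_end - clipped_start).total_seconds()

    return 100.0 * down_seconds / period_seconds


# Made-up sample data for a 30-day period.
period_start = datetime(2024, 1, 1)
period_end = period_start + timedelta(days=30)
outages = [
    (datetime(2024, 1, 3, 9, 0), datetime(2024, 1, 3, 10, 30), False),   # 90 min, unplanned
    (datetime(2024, 1, 10, 2, 0), datetime(2024, 1, 10, 4, 0), True),    # planned maintenance
    (datetime(2024, 1, 20, 14, 0), datetime(2024, 1, 20, 16, 6), False), # 126 min, unplanned
]
print(unplanned_downtime_pct(outages, period_start, period_end))  # 0.5
```

A key result built on this might read “Reduce unplanned downtime from 0.5% to 0.1%”, with the starting value taken from a calculation like the one above.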

Outcome-focused metrics are a great balance. They give you a more concrete measurement while being abstract enough to keep flexibility in the projects you can choose. But those benefits come at a cost.

In making the jump from direct measurement to outcome-based measurement, you have to introduce assumptions. Measuring unplanned downtime assumes that that’s the source of your site’s lack of resiliency. It assumes that improving one will improve the other.

Depending on your business, that may be an assumption you’re willing to make. But keep in mind the more assumptions you incorporate the easier it is to get off track. Many teams have found themselves achieving their key results but failing their objectives. Invalid assumptions are often to blame.

Depending on your team, company, or project, outcome-based key results may be difficult or impossible to measure.

For example, if you’re just starting work on a new product you may not have the monitoring in place to track unplanned downtime. That doesn’t mean it’s not important — in another situation, it would be a great key result — but if you can’t measure it now then it’s useless.

Teams that find themselves in this position only have one option. They have to go one level deeper.

Activity-based key results

You may not be able to measure an outcome, but you can always measure the actions that surround that outcome.

For example, if unplanned downtime is out of reach, you can still measure the number of automated tests that you’re running before shipping software to production. It’s not quite as good — it’s very possible to write a lot of automated tests that don’t help your uptime — but it’s better than nothing.

Much like the shift from direct KRs to outcome-based KRs, the shift to activity-based KRs adds assumptions. You’re assuming that automated testing leads to less unplanned downtime. And you’re assuming that less unplanned downtime leads to better resiliency. If those assumptions are correct then you’re in good shape. But if they’re wrong then you may do a lot of work that has very little impact.

The bigger and more insidious risk with activity-based key results is that you’re right, but weakly. If you set automated test volume as a key result then you’ll get more automated tests. And if your assumptions are correct, then your site will become more resilient. But what if automated testing is only a small problem? What if the bigger issue is something else like the size of your database?

Activity-based key results lock you into trying to solve the problem in one specific way. What you gain in clarity, you lose in flexibility and precision.

Find where you fit

Putting it all together

“What makes a key result good?” is one of the most common questions people ask about using OKRs. And unfortunately, it’s a tough one to answer. A great key result in one situation might be a terrible one in another. The most important thing is finding the right balance between accuracy, precision, clarity, and the ability to measure.

Start by looking for a way to measure the objective directly. There’s only a slim chance you can, but if it’s feasible for your goal and your time frame, then go for it. Next, think about what observable outcomes you can track that go along with your objective. Try to find some metrics that are accessible, easy to understand, and that depend on low-risk assumptions. If all else fails and you can’t find any good outcome-based metrics, then consider what behaviors you can track instead. Set reasonable targets for those activities and start to track them, but don’t forget to share the assumptions you’re making and the ways they could go wrong. The more people looking out for issues, the better.
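
The fallback order described above can be sketched in a few lines of Python. This is only an illustration: the `Candidate` class, the `pick_key_results` function, and the example candidates are hypothetical, and ranking by the number of assumptions is a crude stand-in for judging how risky those assumptions are.

```python
from dataclasses import dataclass, field


@dataclass
class Candidate:
    name: str
    kind: str             # "direct", "outcome", or "activity"
    measurable_now: bool  # can we actually track this today?
    assumptions: list = field(default_factory=list)


# Prefer direct measurement, then outcomes, then activities.
PREFERENCE = ["direct", "outcome", "activity"]


def pick_key_results(candidates):
    """Return the measurable candidates from the most preferred kind available."""
    for kind in PREFERENCE:
        usable = [c for c in candidates if c.kind == kind and c.measurable_now]
        if usable:
            # Within a kind, list the candidates with the fewest assumptions first.
            return sorted(usable, key=lambda c: len(c.assumptions))
    return []


# A new product with no downtime monitoring in place yet.
candidates = [
    Candidate("Website Resiliency score", "direct", measurable_now=False),
    Candidate("Unplanned downtime %", "outcome", measurable_now=False,
              assumptions=["downtime is the main source of poor resiliency"]),
    Candidate("Automated tests run before each deploy", "activity", measurable_now=True,
              assumptions=["more tests lead to less unplanned downtime",
                           "less unplanned downtime means better resiliency"]),
]

for choice in pick_key_results(candidates):
    print(choice.kind, "-", choice.name)
    for assumption in choice.assumptions:
        print("  assumes:", assumption)
```

Printing the assumptions next to each choice is deliberate: it keeps them visible to everyone who reads the key result.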

Writing great key results is a hard skill to master, but once you do, the difference is dramatic.

If you have any questions, comments, or thoughts, please reach out on Twitter. I’m always happy to chat.